Theoretical and iperf-based performance.

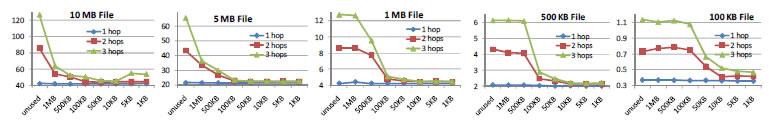

We have tested RAMP performance when transferring files while varying file size ([100KB, 10MB] range), path length (1, 2, or 3 hops), and bufferSize (disabled or in the [1KB, 1MB] range). To easily compare performance results, we have limited the bandwidth of each single-hop link to a maximum of 2Mbit/s.

Lower Bound Identification

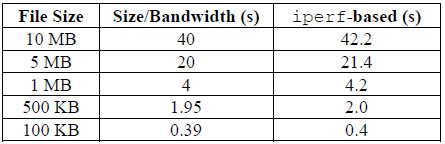

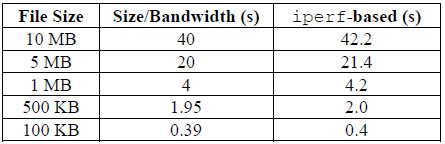

To better understand the reported results, we identify a lower bound on transfer time, i.e., the time needed for file transfer over a traditional TCP/IP fixed network (layer-3 routing), determined experimentally via the iperf command (no notable differences were observed while varying the number of hops). The distance between RAMP performance and this lower bound also indicates the overhead of making routing choices at the application layer. The table below summarizes iperf-based results and file_size/bandwidth ratios.
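The file_size/bandwidth ratio mentioned above can be computed with a minimal sketch like the following. It only covers the two endpoints of the tested file-size range on a 2Mbit/s link; the function name and the use of decimal units (1KB = 1000 bytes) are assumptions made for illustration, and the values it prints are theoretical ratios, not the measured iperf results.

```python
def ideal_transfer_time_s(file_size_bytes: int, bandwidth_bit_s: float) -> float:
    """Return the file_size/bandwidth ratio in seconds, ignoring any protocol overhead."""
    if bandwidth_bit_s <= 0:
        raise ValueError("bandwidth must be positive")
    return file_size_bytes * 8 / bandwidth_bit_s


# Per-link bandwidth cap used in the tests (2 Mbit/s).
LINK_BANDWIDTH_BIT_S = 2_000_000

# Endpoints of the tested file-size range; decimal units are an assumption.
FILE_SIZES_BYTES = {"100KB": 100_000, "10MB": 10_000_000}

for label, size in FILE_SIZES_BYTES.items():
    print(f"{label}: {ideal_transfer_time_s(size, LINK_BANDWIDTH_BIT_S):.1f} s")
```

Under these assumptions the ratio is 0.4 s for a 100KB file and 40 s for a 10MB file. Any measured transfer time, whether from iperf or from RAMP, is expected to be higher because of protocol overhead.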

We have tested RAMP performance when running a real-time video conferencing service in the case of abrupt connectivity interruption and dynamic video stream migration to a new path (Continuity Manager component activated). Our video service exploits off-the-shelf VLCMediaPlayer to capture (at sender) and to play (at receiver) the video stream from a regular webcam (25 frames/s, resolution = 320x240). Data are MPEG2-encoded and transmitted as MPEG Transport Streams encapsulated in the Real-time Transport Protocol (RTP).

Path Reconfiguration

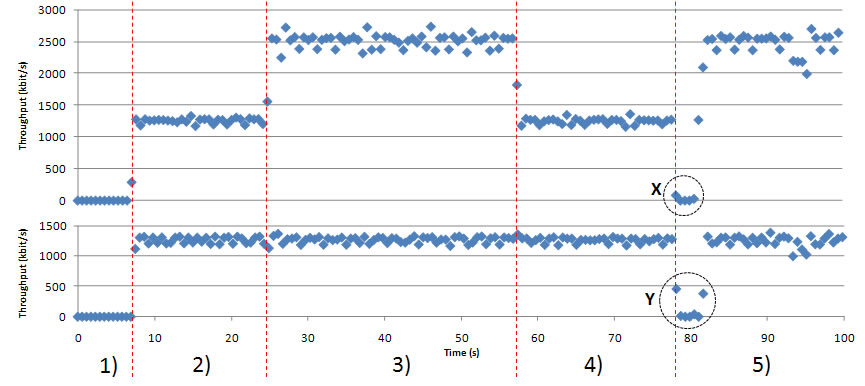

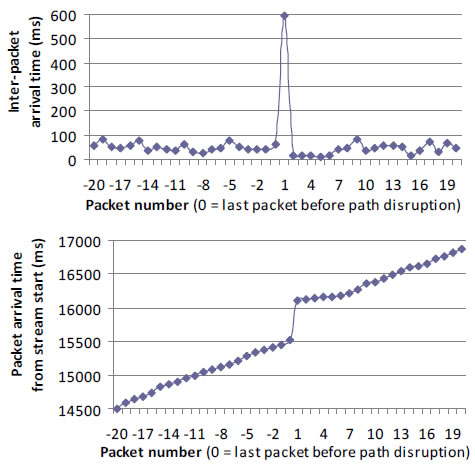

The figure below shows that RAMP avoids packet dropping by only delaying packets in case of path disruption (at packet0 reception time). After path requalification, the delayed packets accumulated at the node performing path requalification are immediately rerouted and rapidly reach the receiver, thus helping to reduce perceivable quality degradation (inter-packet arrival time rapidly returns to its usual fluctuating pattern). The number of delayed packets has been shown to depend mainly on two factors: the average packet interval and the time needed to determine the new path segment. For instance, in the case shown in the figure, only 7 packets are delayed.
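The dependence on these two factors can be illustrated with a rough estimate: packets that arrive at the requalifying node while the new path segment is being determined are the ones that get delayed. The sketch below is an assumed first-order model, not the method used to obtain the reported measurements; the function name and the example values are hypothetical.

```python
import math


def estimated_delayed_packets(requalification_time_ms: int,
                              avg_packet_interval_ms: int) -> int:
    """Estimate how many packets queue up while a new path segment is determined.

    Assumed model: packets arrive at a steady average interval and every packet
    arriving during path requalification is buffered rather than dropped.
    """
    if avg_packet_interval_ms <= 0:
        raise ValueError("average packet interval must be positive")
    if requalification_time_ms < 0:
        raise ValueError("requalification time cannot be negative")
    return math.ceil(requalification_time_ms / avg_packet_interval_ms)


# Hypothetical values chosen for illustration only (not measured):
# 300 ms to find a new path segment, one packet every 40 ms on average.
print(estimated_delayed_packets(300, 40))
```

In this model, halving the requalification time or doubling the packet interval roughly halves the number of delayed packets.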

The RAMP middleware is able to intercept and re-cast multimedia streams flowing through intermediate nodes (SmartSplitter component activated to perform re-casting). Our video broadcasting service exploits off-the-shelf VLCMediaPlayer to capture (at sender) and to play (at receiver) an RTP/MPEG-TS multimedia stream with an MPEG2 video codec at 768kbps and an MPEG-1 Layer1 audio codec at 64kbps; the overall throughput required for each stream (audio/video bitrate plus RTP/MPEG-TS/RAMP overhead) is about 1250kbps. To evaluate the performance of our middleware, we consider the following testbed: a multimedia streamer on NodeS provides a remote client (NodeC) with multimedia streams, while an intermediate NodeR behaves both as client and re-caster.
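The bitrate figures above imply how much of each stream is encapsulation overhead. The short calculation below uses only the numbers quoted in the text; since the overall throughput is given as "about 1250kbps", the resulting overhead is approximate as well, and the variable names are chosen for illustration.

```python
# Figures quoted in the text (kbps).
VIDEO_KBPS = 768            # MPEG2 video
AUDIO_KBPS = 64             # MPEG-1 Layer1 audio
TOTAL_STREAM_KBPS = 1250    # approximate overall throughput per stream

# Pure audio/video payload bitrate.
media_kbps = VIDEO_KBPS + AUDIO_KBPS

# Whatever remains is attributed to RTP/MPEG-TS/RAMP overhead.
overhead_kbps = TOTAL_STREAM_KBPS - media_kbps
overhead_share = overhead_kbps / TOTAL_STREAM_KBPS

print(f"media: {media_kbps} kbps")
print(f"overhead: {overhead_kbps} kbps ({overhead_share:.0%} of the stream)")
```

With these inputs the media payload is 832kbps and the remaining overhead is roughly 418kbps, about a third of the per-stream throughput.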

Stream Re-casting

To fully understand and quantitatively evaluate the dynamic behavior of our middleware, we have carefully investigated the case of re-casting de/activation while stream provisioning is in progress, in particular the transitory phases immediately before and after re-casting activation (see figure below). The reported results show NodeS (up) and NodeR (down) outgoing throughput with no receiver (interval 1), with one remote receiver (interval 2), with one remote receiver plus a receiver on NodeR (intervals 3, 4, 5), without re-casting activation (intervals 1, 2, 3, 5), and with re-casting activation (interval 4).
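As a reading aid for the five intervals, the sketch below models the outgoing throughput one would expect at NodeS. It rests on assumptions not stated in the text: that without re-casting NodeS sends one separate stream of about 1250kbps per receiver, and that with re-casting NodeS sends a single stream which NodeR duplicates. The numbers are therefore expectations from this simple model, not the measured values in the figure.

```python
STREAM_KBPS = 1250  # approximate per-stream throughput quoted earlier


def expected_nodes_outgoing_kbps(receivers: int, recasting: bool) -> int:
    """Expected NodeS outgoing throughput under the assumed model.

    Without re-casting, NodeS sends one stream per receiver.
    With re-casting, a single stream leaves NodeS and NodeR duplicates it.
    """
    if receivers < 0:
        raise ValueError("receivers cannot be negative")
    if receivers == 0:
        return 0
    streams = 1 if recasting else receivers
    return streams * STREAM_KBPS


# Interval -> (number of receivers, re-casting active), as described in the text.
INTERVALS = {
    1: (0, False),  # no receiver
    2: (1, False),  # one remote receiver
    3: (2, False),  # remote receiver plus receiver on NodeR
    4: (2, True),   # same receivers, re-casting activated
    5: (2, False),  # re-casting deactivated again
}

for interval, (receivers, recasting) in INTERVALS.items():
    kbps = expected_nodes_outgoing_kbps(receivers, recasting)
    print(f"interval {interval}: ~{kbps} kbps from NodeS")
```

Under this model, activating re-casting in interval 4 brings the NodeS outgoing throughput back to the single-stream level of interval 2, even though two receivers are being served.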